Safety with Agency: Human-Centered Safety Filter with Application to AI-Assisted Motorsports

TL;DR

We propose a human-centered safety filter (HCSF) for shared autonomy that significantly enhances system safety without compromising human agency. Our HCSF is built upon a novel state–action control barrier function (Q-CBF) safety filter, which requires no knowledge of the system dynamics for either synthesis or runtime safety enforcement.

Abstract

We propose a human-centered safety filter (HCSF) for shared autonomy that significantly enhances system safety without compromising human agency. Our HCSF is built on a neural safety value function, which we first learn scalably through black-box interactions and then use at deployment to enforce a novel state–action control barrier function (Q-CBF) safety constraint. Since this Q-CBF safety filter requires no knowledge of the system dynamics for either synthesis or runtime safety monitoring and intervention, our method applies readily to complex, black-box shared autonomy systems. Notably, our HCSF's CBF-based interventions modify the human's actions minimally and smoothly, avoiding the abrupt, last-moment corrections delivered by many conventional safety filters. We validate our approach in a comprehensive in-person user study using Assetto Corsa, a high-fidelity car racing simulator with black-box dynamics, to assess robustness in “driving on the edge” scenarios. We compare both trajectory data and drivers’ perceptions of our HCSF assistance against unassisted driving and a conventional safety filter. Experimental results show that 1) compared to having no assistance, our HCSF improves both safety and user satisfaction without compromising human agency or comfort, and 2) relative to a conventional safety filter, our proposed HCSF boosts human agency, comfort, and satisfaction while maintaining robustness.

Contributions

- We introduce, to the best of our knowledge, the first fully model-free control barrier function (CBF) safety filter. We learn a neural safety value function through interactions with a black-box system and, at deployment, enforce a safety constraint based on a novel Q-CBF without any knowledge of the system dynamics (e.g., a control-affine model). Both the synthesis and the deployment of our Q-CBF scale to high-dimensional systems.

- Building on the learned Q-CBF, we demonstrate our HCSF in Assetto Corsa (AC), a high-fidelity racing simulator with black-box dynamics, where real human drivers of diverse skill levels push the filter to its limits across all potential failure modes. To the best of our knowledge, this is the first time a safety filter has been synthesized, deployed, and evaluated in such a high-dimensional, dynamic shared autonomy setting involving human operators.

- We conduct an extensive in-person user study with 83 human participants and conclude, with statistical significance in both trajectory data and human driver responses, that our HCSF considerably improves safety and user satisfaction without compromising human agency or comfort relative to having no safety filter. Furthermore, compared to a conventional safety filter, our HCSF offers significant gains in human agency, comfort, and overall satisfaction while maintaining at least the same level of robustness.
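To see why dropping the control-affine assumption matters, it helps to compare standard CBF conditions with a state–action version. The first line is the textbook continuous-time condition for control-affine dynamics $\dot x = f(x) + g(x)u$, with $\alpha$ an extended class-$\mathcal{K}$ function; the second is the usual discrete-time condition for a model $x_{t+1} = F(x_t, u_t)$. Both need a model to evaluate. The third line is a sketch under our own assumptions, not necessarily the paper's exact Q-CBF constraint: it replaces the model-dependent term $h(F(x_t, u_t))$ with a learned state–action safety value $Q(x_t, u_t)$ and takes $V(x) = \max_u Q(x, u)$ as the barrier.

```latex
\begin{aligned}
&\nabla h(x)^\top\big(f(x) + g(x)\,u\big) \ge -\alpha\big(h(x)\big)
  && \text{(continuous time, control-affine model)}\\
&h\big(F(x_t, u_t)\big) \ge (1-\gamma)\,h(x_t), \quad \gamma \in (0, 1]
  && \text{(discrete time, model } F\text{)}\\
&Q(x_t, u_t) \ge (1-\gamma)\,V(x_t), \quad V(x) = \max_{u} Q(x, u)
  && \text{(assumed model-free analogue)}
\end{aligned}
```

The third condition can be checked by querying $Q$ alone: no $F$, $f$, $g$, or gradient of $h$ is ever evaluated, which is what makes a runtime filter built on it model-free.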

Approach

Our HCSF builds upon a novel Q-CBF safety filter, which is, to the best of our knowledge, the first fully model-free CBF safety filter. We first prove that the safety value function is a valid discrete-time CBF. Then, we scalably learn a state–action safety value function through black-box interactions with the system via model-free RL-based Hamilton-Jacobi (HJ) reachability analysis. At runtime, we leverage the learned state–action safety value function to enforce a novel Q-CBF safety constraint, which requires no knowledge of the system dynamics for either safety monitoring or intervention. Therefore, our Q-CBF safety filter is fully model-free from synthesis through deployment, unlocking a wide range of applications for complex, black-box systems.
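As an illustration of what learning a state–action safety value purely through black-box interactions can look like, here is a small tabular sketch. It uses the discounted safety Bellman backup of Fisac et al. (2019), one common form of RL-based HJ reachability; whether the authors use exactly this backup is not stated here. The 1D lane, the constant drift, the margin function, and all function names are hypothetical, and a real system would replace the table with a neural network trained on sampled transitions.

```python
# Toy model-free safety Q-learning with the discounted safety Bellman backup
#   Q(s, a) <- (1 - gamma) * g(s) + gamma * min(g(s), max_a' Q(s', a'))
# All names and the toy "lane" system below are hypothetical.

N = 11                   # lateral positions 0..10
ACTIONS = (-1, 0, 1)     # steer left, straight, steer right


def margin(p):
    """Safety margin g(p): negative only at the track edges p = 0 and p = 10."""
    return min(p, N - 1 - p) - 1


def black_box_step(p, a):
    """Simulator the learner may only query: constant +1 drift plus steering."""
    return max(0, min(N - 1, p + 1 + a))


def learn_safety_q(gamma=0.9, lr=0.5, sweeps=500):
    """Repeatedly sample every (state, action) pair and apply the safety backup."""
    q = {(p, a): 0.0 for p in range(N) for a in ACTIONS}
    for _ in range(sweeps):
        for p in range(N):
            for a in ACTIONS:
                nxt = black_box_step(p, a)              # only a simulator call
                v_next = max(q[(nxt, b)] for b in ACTIONS)
                g = margin(p)
                target = (1 - gamma) * g + gamma * min(g, v_next)
                q[(p, a)] += lr * (target - q[(p, a)])
    return q


q = learn_safety_q()
# Safety value function V(p) = max_a Q(p, a); V(p) >= 0 marks states judged safe.
value = {p: max(q[(p, a)] for a in ACTIONS) for p in range(N)}
```

After training, `value[5]` is about 4 and `q[(5, 1)]` about 2.2, while steering right from position 9 (`q[(9, 1)]`, about -0.9) is correctly flagged as leading to failure. The learner never inspects the formula inside `black_box_step`; it only calls it.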

During deployment, our HCSF solves an optimal control problem (OCP) at each timestep to compute a safe action that minimally deviates from the human's intended action while satisfying the Q-CBF safety constraint. Thus, our HCSF actively promotes human agency while ensuring the system remains within the maximal safe set. Whenever an intervention occurs, our HCSF provides visual cues that reflect the intervention magnitude at each input channel, fostering transparent collaboration with the human operator.
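The per-timestep OCP can be sketched as follows, under assumptions that are ours rather than the paper's: `toy_q` is a hypothetical analytic stand-in for the learned neural Q-function, the constraint uses the assumed form Q(x, u) >= (1 - gamma) * max_u' Q(x, u'), and the OCP is solved by brute-force search over a grid of (steer, throttle) actions instead of whatever solver the authors use. The returned per-channel |u_f - u_h| is one simple way to drive per-channel visual cues.

```python
from itertools import product


def toy_q(d, u):
    """Hypothetical stand-in for a learned safety Q-network.

    d is the lateral offset from the track centre (larger |d| is riskier) and
    u = (steer, throttle) in [-1, 1]^2. Positive values mean "judged safe".
    """
    steer, throttle = u
    return 1.0 - abs(d + 0.5 * steer) - 0.3 * max(throttle, 0.0) * abs(d)


def action_grid(step=0.05):
    """Uniform grid over [-1, 1]^2, used to solve the OCP by brute force."""
    n = int(round(2.0 / step))
    axis = [-1.0 + i * step for i in range(n + 1)]
    return list(product(axis, axis))


def hcsf_filter(q, x, u_h, gamma=0.2, candidates=None):
    """Return the action closest to u_h (squared distance) that satisfies the
    assumed constraint Q(x, u) >= (1 - gamma) * V(x), plus the per-channel
    intervention magnitude for visual cues."""
    if candidates is None:
        candidates = action_grid()
    v = max(q(x, u) for u in candidates)    # V(x) approximated as max_u Q(x, u)
    threshold = (1.0 - gamma) * v           # assumed Q-CBF-style bound
    if q(x, u_h) >= threshold:              # human action already safe: no intervention
        return u_h, tuple(0.0 for _ in u_h)
    feasible = [u for u in candidates if q(x, u) >= threshold]
    if feasible:
        u_f = min(feasible, key=lambda u: sum((a - b) ** 2 for a, b in zip(u, u_h)))
    else:                                   # only possible if V(x) < 0: take the safest action
        u_f = max(candidates, key=lambda u: q(x, u))
    cue = tuple(abs(a - b) for a, b in zip(u_f, u_h))
    return u_f, cue
```

In the centre of the track, `hcsf_filter(toy_q, 0.0, (0.1, 0.2))` returns the human action unchanged. Near the edge, `hcsf_filter(toy_q, 0.8, (1.0, 0.5))` returns the closest grid action that satisfies the constraint, about (-0.75, 0.05). The grid search keeps the sketch dependency-free; with a differentiable Q-network, a gradient-based or sampling-based optimizer would be a more natural choice.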

Results

We conducted a large-scale user study in an AI-assisted car racing environment using AC to assess the robustness of our HCSF and how human operators perceive its interventions. We compare our HCSF against unassisted driving and a last-resort safety filter (LRSF), a conventional value-based safety filter that switches to a fallback policy when the current state is deemed unsafe. Both trajectory data and the users' subjective responses were used to validate our hypotheses:

- compared to unassisted driving, our HCSF improves safety and satisfaction without compromising human agency and comfort.

- compared to LRSF, our HCSF enhances human agency, comfort, and satisfaction while maintaining robustness.
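For contrast, the LRSF baseline described above can be sketched as a switch: it monitors the safety value of the current state and, below a margin epsilon, replaces the human action wholesale with a fallback action. `toy_value`, `toy_fallback`, and epsilon = 0.1 are hypothetical placeholders, not the study's actual value function or fallback policy.

```python
def lrsf_filter(value, fallback, x, u_h, epsilon=0.1):
    """Last-resort switching filter: pass the human action through while the
    state looks safe, otherwise hand full control to the fallback policy."""
    if value(x) >= epsilon:
        return u_h
    return fallback(x)


def toy_value(d):
    """Hypothetical safety value of lateral offset d (track edge at |d| = 1)."""
    return 1.0 - abs(d)


def toy_fallback(d):
    """Hypothetical fallback: full steer back toward the centre, zero throttle."""
    return (-1.0 if d > 0 else 1.0, 0.0)
```

Note that once the switch fires, the output does not depend on `u_h` at all; this all-or-nothing behaviour is what the intervention-magnitude and smoothness comparisons in the results quantify.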

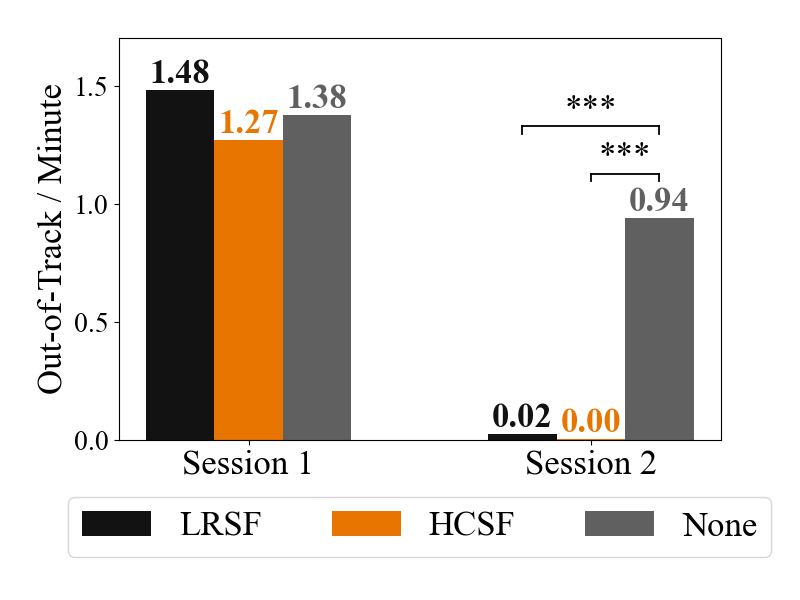

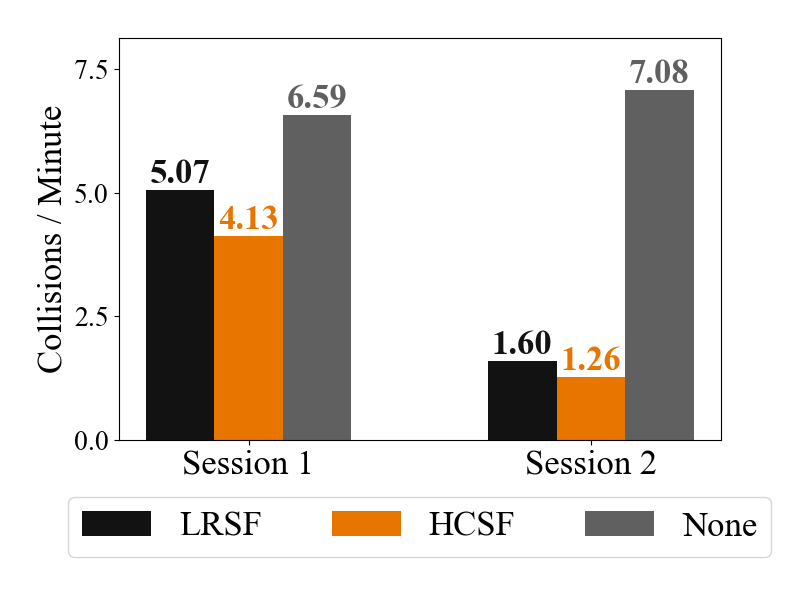

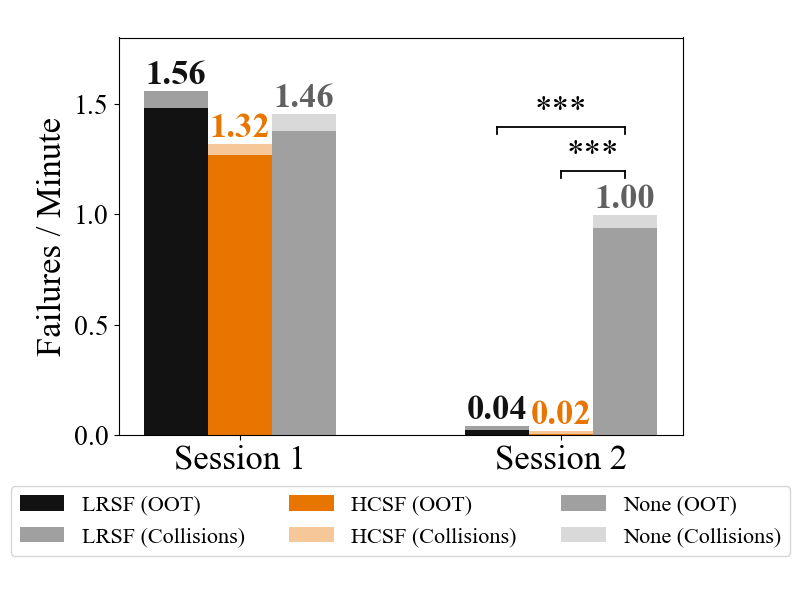

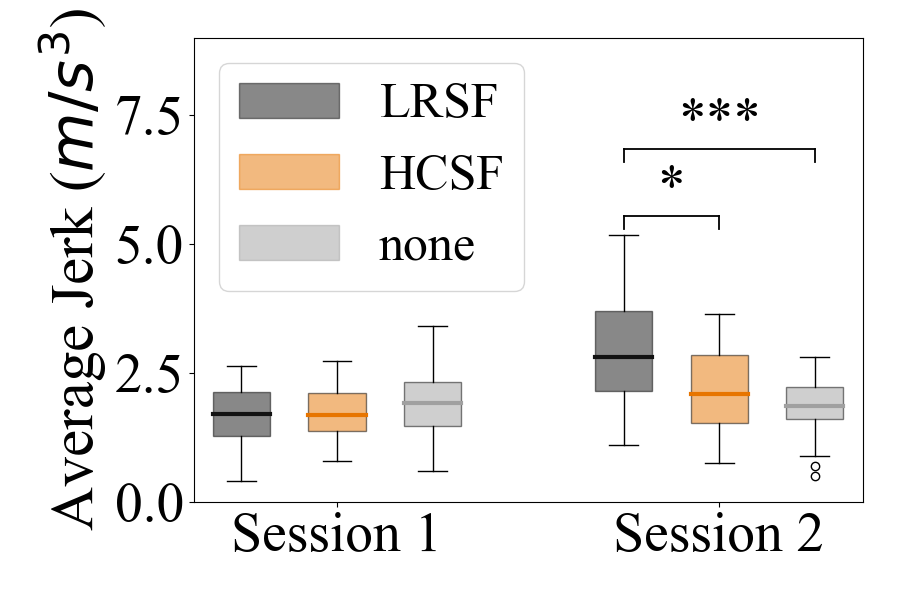

Our HCSF achieved near-zero failures in session 2 across all participants in the HCSF group, a significant safety improvement over unassisted driving. Although our HCSF also outperformed LRSF in both failure modes (i.e., out-of-track incidents and collisions), these differences were not statistically significant. In the figures below, statistical significance is indicated by asterisks (* corresponds to p < 0.05; ** to p < 0.01; *** to p < 0.001).

A similar trend emerges in the user responses as well. Participants assisted by our HCSF in session 2 felt significantly more confident in the safety filter's robustness than those in the unassisted group. Although participants in the HCSF group also reported higher confidence than those in the LRSF group, this difference was not statistically significant.

The histogram below reports, for the HCSF and LRSF groups, the distribution of I.M. (input modification magnitude) over all participants and all timesteps, including timesteps with no safety filter intervention, and thus jointly captures intervention frequency and magnitude. Our HCSF reduces the frequency of large interventions relative to LRSF, so the filtered actions remain closer to the human operator's intended actions. In other words, our HCSF enhances human agency over LRSF by offering human-centered nudges of smaller magnitude. By contrast, LRSF does not take the human action into account, often leading to unnecessarily large corrections that undermine the human's sense of control.
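How such a histogram can be built is sketched below. The page does not spell out how I.M. is computed, so the Euclidean norm of the difference between filtered and human actions, the bin width, and the helper names are assumptions.

```python
import math
from collections import Counter


def input_modification(u_h, u_f):
    """Assumed I.M. definition: Euclidean norm of (filtered - human) action."""
    return math.sqrt(sum((f - h) ** 2 for h, f in zip(u_h, u_f)))


def im_histogram(human_actions, filtered_actions, bin_width=0.25):
    """Fraction of ALL timesteps falling in each I.M. bin (keyed by bin start).

    Timesteps without intervention contribute I.M. = 0, so the histogram mixes
    how often the filter intervenes with how strongly it does so.
    """
    counts = Counter(
        int(input_modification(h, f) // bin_width)
        for h, f in zip(human_actions, filtered_actions)
    )
    total = len(human_actions)
    return {k * bin_width: c / total for k, c in sorted(counts.items())}
```

For example, three untouched timesteps followed by one in which steering is flipped from 0.5 to -0.5 give `{0.0: 0.75, 1.0: 0.25}`: the zero bin records how rarely the filter acts, and the other bins record how hard it acts when it does.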

Qualitative responses from participants also strongly support the improved human agency of our HCSF over LRSF. As shown in the box plot below, participants assisted by our HCSF during session 2 reported a significantly stronger sense of being in control compared to those assisted by LRSF. Meanwhile, the unassisted group recorded the highest average agency score—unsurprising because they had full control of the vehicle in all sessions—but its difference from the HCSF group was not statistically significant.

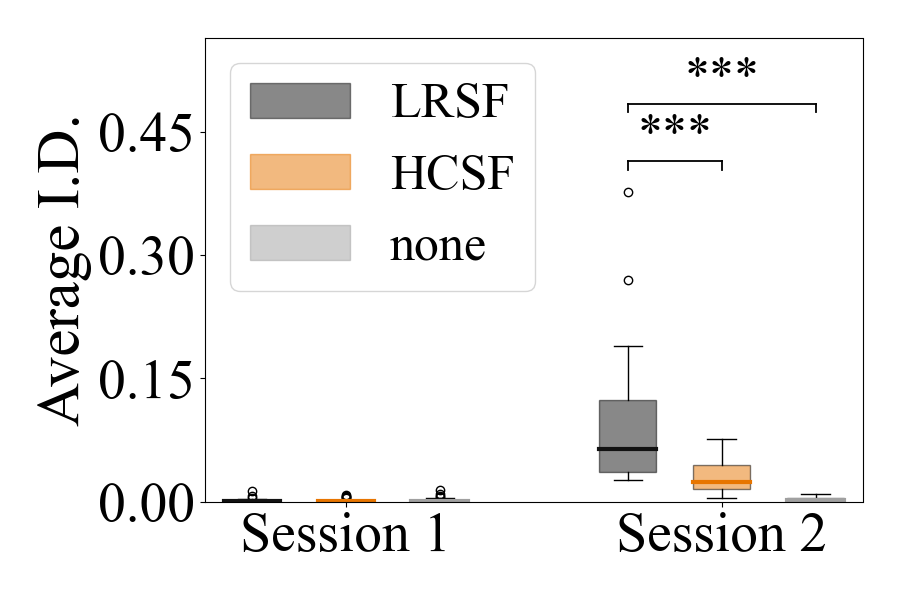

The box plots below show the distributions of average I.D. (squared magnitude of the first-order control input difference) and jerk for all three groups. Notably, our HCSF significantly reduces both average I.D. and jerk compared to LRSF, while its difference from unassisted driving is not statistically significant. This indicates that our HCSF's interventions are smoother and less abrupt than those of LRSF, leading to improved user comfort. We conjecture that our HCSF's smooth interventions stem from 1) the Q-CBF safety constraint, which delivers more gradual interventions than the switching behavior of LRSF, and 2) our HCSF anchoring the filtered action around the human's action, which is inherently smooth and continuous.
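Both smoothness metrics can be sketched in a few lines. Average I.D. follows the definition given above (mean squared norm of the first-order input difference); for jerk, the page does not say how it was computed, so the third-order finite difference of a 1D position trace used here is an assumed estimator, and the function names are hypothetical.

```python
def average_input_difference(actions):
    """Average I.D.: mean of ||u_t - u_{t-1}||^2 over consecutive timesteps."""
    diffs = [
        sum((b - a) ** 2 for a, b in zip(prev, curr))
        for prev, curr in zip(actions, actions[1:])
    ]
    return sum(diffs) / len(diffs)


def average_jerk(positions, dt):
    """Mean |jerk| from third-order finite differences of a 1D position trace
    (an assumed estimator; the study may compute jerk differently)."""
    jerks = [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i])
        / dt ** 3
        for i in range(len(positions) - 3)
    ]
    return sum(abs(j) for j in jerks) / len(jerks)
```

An abrupt input sequence (0, 1, 0) scores an average I.D. of 1.0, while a gradual one over the same range (0, 0.5, 1) scores 0.25; this is the kind of gap a switching filter opens up relative to a filter anchored on the human's smooth input.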

Participants' reported sense of smoothness also supports improved user comfort with our HCSF over LRSF. As shown in the box plot below, participants assisted by our HCSF reported a significantly higher smoothness score in session 2 than those assisted by LRSF. Similar to the agency results, the unassisted group exhibited the highest average smoothness score, which is unsurprising given the absence of interventions of any sort. Nonetheless, we emphasize that the gap between the HCSF and unassisted groups was not statistically significant, showing how well our HCSF preserves a smooth and comfortable driving experience.

Finally, participants assisted by our HCSF in session 2 reported significantly higher satisfaction scores than those in the unassisted and LRSF groups.

Therefore, trajectory data and participant responses from our extensive user study validate both hypotheses.

Citation

@inproceedings{oh2025safety,
  title={Safety with Agency: Human-Centered Safety Filter with Application to AI-Assisted Motorsports},
  author={Oh, Donggeon David and Lidard, Justin and Hu, Haimin and Sinhmar, Himani and Lazarski, Elle and Gopinath, Deepak and Sumner, Emily S and DeCastro, Jonathan A and Rosman, Guy and Leonard, Naomi Ehrich and Fisac, Jaime Fern{\'a}ndez},
  booktitle={Proceedings of Robotics: Science and Systems},
  year={2025}
}